Rutgers Business School Holds Hackathon Based on New Google Machine Learning APIs

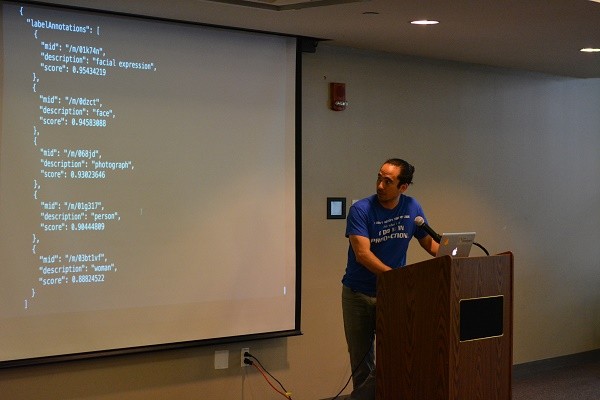

About 60 coders came to Rutgers University Business School in Newark for a two-day hackathon based on recently released Google Cloud Machine Learning APIs.

The hackathon was organized by Rutgers student group Collective Entrepreneurs Organization (CEO), The Ideas Maker (Newark) and GDG North Jersey.

The event, which ran from 10 a.m. on Oct. 22 until 6 p.m. on Oct. 23, was sponsored by Google, DigitalOcean (New York) and The Ideas Maker. Eight teams formed, and five of them competed at the end of the hackathon. The first prize was a $200 gift card for the team. The second prize was a Google Cardboard VR viewer and a Google Chromecast device.

The teams worked with new APIs released by Google. The Cloud Vision API enables developers to programmatically analyze the contents of an image by leveraging powerful machine learning models through a simple REST API. “It quickly classifies images into thousands of categories (such as ‘sailboat,’ ‘lion,’ ‘Eiffel Tower’), detects individual objects and faces within images, and finds and reads printed words contained within images,” says the Cloud Vision Web page.
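
For readers curious about what "a simple REST API" means in practice, here is a minimal sketch in Python of the JSON body that a Cloud Vision `images:annotate` request carries. It is not taken from any team's code. The helper name `build_annotate_request` and its default arguments are assumptions made for illustration, and the sketch only builds the payload; it does not send anything or handle authentication.

```python
import base64
import json

# Public REST endpoint for Cloud Vision image annotation.
# A real call would also need an API key or OAuth token (not shown here).
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def build_annotate_request(
    image_bytes,
    feature_types=("LABEL_DETECTION", "FACE_DETECTION", "TEXT_DETECTION"),
    max_results=5,
):
    """Build the JSON body for a single-image annotate request.

    Hypothetical helper: the function name and defaults are illustrative.
    """
    # The API expects the raw image bytes as a base64-encoded string.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "requests": [
            {
                "image": {"content": encoded},
                # One entry per kind of analysis requested for the image.
                "features": [
                    {"type": feature, "maxResults": max_results}
                    for feature in feature_types
                ],
            }
        ]
    }


if __name__ == "__main__":
    body = build_annotate_request(b"placeholder image bytes")
    print("POST", VISION_ENDPOINT)
    print(json.dumps(body, indent=2))
```

The three feature types in the default argument correspond to the capabilities quoted above: classifying images, detecting faces, and reading printed words.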

The Cloud Speech API “enables developers to convert audio to text by applying powerful neural network models in an easy to use API,” says Google’s Cloud Speech page. “The API recognizes over 80 languages and variants, to support your global user base.” It can transcribe speech dictated into a microphone or enable command-and-control through voice, among many other uses.

After getting an overview of how to implement these systems, the teams went to work.

- First prize was taken by Team Seeing Eye Dog, which created a “Visual Input Auditory Output App – Eye Assistant.” According to the team’s description, this Web application uses the Cloud Vision API to do face and mood recognition. The app then provides auditory output to describe the mood of the subject. “This app will allow people with visual impairments to understand mood through facial expression, even if they are unable to see them,” said the team, which was led by Alicina Mermer.

- Team Semantix won second prize. Led by developer Mackensie Alverez, the team created a Web application called “CardNet,” which allows the user to take a picture of a business card. The app reads the content and parses it into the various components of contact information, such as name, company name and phone number; then it saves every business card recorded, along with all the contact information, in the user’s account. This gives the user a contact database of easily retrievable information, without having to carry around hundreds of business cards.
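
The article does not describe how CardNet parses a card, so the following is only a rough sketch of the general idea: take the text an OCR step returns and sort its lines into contact fields. The function `parse_card`, the regular expressions and the rule that the first two leftover lines are the name and company are all assumptions for illustration, not the team's method.

```python
import re

# Simple illustrative patterns; real cards would need more robust handling.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"[+(]?\d[\d\s().-]{6,}\d")


def parse_card(ocr_text):
    """Split OCR text from a business card into contact fields.

    Hypothetical heuristic: lines that are neither an email address nor a
    phone number are assumed to be the name, then the company, in order.
    """
    contact = {"name": None, "company": None, "phone": None, "email": None}
    remaining = []

    for line in ocr_text.splitlines():
        line = line.strip()
        if not line:
            continue
        email = EMAIL_RE.search(line)
        phone = PHONE_RE.search(line)
        if email and contact["email"] is None:
            contact["email"] = email.group()
        elif phone and contact["phone"] is None:
            contact["phone"] = phone.group()
        else:
            remaining.append(line)

    if remaining:
        contact["name"] = remaining[0]
    if len(remaining) > 1:
        contact["company"] = remaining[1]
    return contact


if __name__ == "__main__":
    sample = "Jane Doe\nExample Corp\n555-010-0199\njane@example.com"
    print(parse_card(sample))
```

In a full application, the resulting dictionary would then be stored under the user's account, which is the step that builds the contact database described above.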

Other projects developed included:

- Team CIET, which created a food information app called “CIET” (Can I Eat This). It’s a Web application that lets users vocally input universal product codes (UPCs) and get nutritional facts and ingredients for the products. “This app will allow users to know exactly what they are purchasing and exactly what is in it,” the team said.

- Team InfoChat came up with a group chat app featuring what the team described as live info learning. The chat application provides links based on keywords used by the chat’s participants. The program keeps learning as the chat continues, recording the keywords and finding relevant context in the links.

- Team Deep Linking YouTube created a video-search app called DLY (Deep Link YouTube). “This Web application will allow users to search YouTube videos based on content and visuals and get deep links that will bring them directly to the part of the video they are searching for,” the team said. “This app will allow users to quickly find the part of a video that is useful to them, without having to search through the whole video.”
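
The team did not say how DLY builds its links. As a rough illustration of what a deep link into a video can look like, the sketch below assumes the common YouTube watch-URL convention of a `t` query parameter giving the start time in seconds. The function name `deep_link` and the placeholder video ID are hypothetical.

```python
from urllib.parse import urlencode


def deep_link(video_id, start_seconds):
    """Return a watch URL that starts playback at the given offset.

    Hypothetical helper; assumes the `t=<seconds>s` URL convention.
    """
    if start_seconds < 0:
        raise ValueError("start_seconds must be non-negative")
    query = urlencode({"v": video_id, "t": f"{int(start_seconds)}s"})
    return f"https://www.youtube.com/watch?{query}"


if __name__ == "__main__":
    # "VIDEO_ID" is a placeholder, not a real video.
    print(deep_link("VIDEO_ID", 90))
```

The harder part of the project, finding which moment of a video matches a search, is not covered by this sketch.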