Three Different Perspectives on AI Provide Food for Thought at NJ-GMIS 2024 TEC

On April 18, the 2024 NJ-GMIS Technology Education Conference (TEC) welcomed 150 Garden State municipal, county and school district IT professionals to the Palace at Somerset Park to learn how technology could make their jobs easier. The conference was sponsored by the New Jersey branch of Government Management Information Sciences (NJ-GMIS).

Keynoting the conference was Giani Cano, an expert in mentalism, who spoke about unlocking the power of focus to enhance decision-making. The participants were also able to talk to vendors who demonstrated their software at the show.

NJTechWeekly.com attended a session called “The Implications of AI within Local Government and Educational Institutions,” in which three speakers discussed their experiences with AI in the workplace and gave advice on how NJ-GMIS members could incorporate AI into their processes.

Bernadette Kucharczuk Urges a Guarded Approach

Bernadette Kucharczuk warns about AI pitfalls. | Esther Surden

On the cautious side of the discussion was Bernadette Kucharczuk, certified government chief information officer, recently retired from serving Jersey City as director of information technology.

Kucharczuk believes that there aren’t enough protections against AI using “your digital crumbs,” the information you give out freely on the internet. “AI is already making decisions about you. That car insurance, right? FICO scores. All of these [decisions] are being based on your digital crumbs, the things that you’re doing, anyway. Patterns of human behavior influence the results of AI searches.”

Using AI to screen job applications may seem like a great idea, because the hard work is being done by something that is supposedly unbiased. But “your system is weeding out people with a high future likelihood of depression. They’re not depressed now. Just maybe in the future,” she said. It’s weeding out “women who are likely to be pregnant in the next year or two. What if it’s hiring aggressive people because that’s your workplace culture?”

She mentioned some colossal AI failures, including the use of algorithms to decide prison sentences and the way the Facebook algorithm allegedly passed over news of the events in Ferguson, Missouri, “after the killing of an African-American teenager by a white police officer under murky circumstances.”

Although AI advocates say that these failures will be addressed and corrected, she noted that two years have passed since some of them occurred, and nothing has been done. Her plea: Don’t rely on AI until these issues have been fixed.

“We cannot escape these difficult questions,” Kucharczuk continued. “We cannot outsource our responsibilities to machines. Artificial intelligence does not give us some ‘get out of ethics free card.’ Data scientist [Fred] Benenson calls this ‘mathwashing.’ We need the opposite. We need to cultivate algorithm suspicion, scrutiny and investigation. We need to make sure we have algorithmic accountability, auditing and meaningful transparency. We need to accept that bringing math and computation to messy, value-laden human affairs does not bring objectivity.”

Kucharczuk continued the presentation by quoting techno-sociologist Zeynep Tufekci.

Sandra Paul Sees the Practical Applications of AI as Essential

Sandra Paul speaks at NJ-GMIS TEC 2024 | Esther Surden

On the other side of the coin, Sandra Paul, director of information technology, Township of Union Public Schools, mentioned the boost in productivity that AI can provide. She said, “We have more than 7,800 kids and 1,200 staff. But I only have a staff of five people. We have 9,000 end points in the school system, including copiers, printers, security cameras, door access systems and telephones.” There are 500 preschool children, and each of those children generates 5,000 data points.

She added that there were many big data-management challenges that AI could address. She pointed out that every time a child gets in trouble or has a problem, it generates a data point. “Think about it. All these incidents happen at school that we have to keep records of.” In addition, special education records have to be kept forever. Employee records involving pensions also generate a lot of data, as does the email system. Compliance adds to the load: “We have a lot of compliance when it comes to the federal government and local and county requirements for disaster recovery planning,” she said. Is it any wonder that directors of technology for school districts use ChatGPT to write their disaster recovery plans?

The infrastructure at a school “requires monitoring alerts and notifications, application management, security management, server provisioning, server management and network management.” She noted that the security cameras are required by federal and state law to have the capability to track where you go in the building. It’s not a matter of someone sitting somewhere looking at a screen. AI is doing all the tracking. Paul added that the security cameras aren’t in bathrooms or locker rooms. “We already have enough cyberbullying,” she said.

Another area where AI is helping is family communications. When there is a problem at school, there can be a delay between when the incident happens and when parents are notified. Paul said that when a chemical spill at one of her schools set off the fire alarm, the AI-assisted program she used was able to notify every student and parent by cell phone. The system also automates compliance checking against federal and state guidelines, so that Paul can make sure the district is complying with all applicable laws.

Marc Pfeiffer Warns of Policy Changes That Will Be Needed as AI Is Adopted

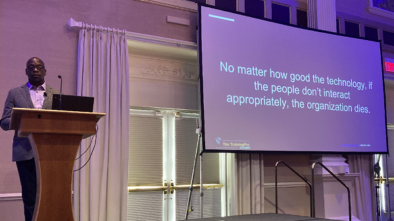

The last to speak was Marc Pfeiffer, senior policy fellow and faculty researcher, Center for Urban Policy Research, Bloustein School of Planning and Public Policy, Rutgers–New Brunswick, who also had a 37-year career in New Jersey local government. Pfeiffer spoke about the policymaking implications of adopting AI tools and infrastructure.

“AI is going to drag our management-focused IT administrators more and more into the world of public policy,” Pfeiffer told the group. “It’s an area you may not have had any training or education in, but it’s an area you are going to have to learn about.

“I want you to walk out of here with an understanding that this is something that is in your world now. Management is going to find that technology management and public policy are going to overlap,” he said.

It’s not just chatbots anymore, Pfeiffer explained. It’s machine learning and natural language processing. It’s neural networking. All of these technologies are coming together. AI includes robotic processes; business process automation; and computer vision, which is the ability to use cameras to see, interpret what they see and act on it.

“So, when it sees a mugging going on in a parking lot, you don’t need a police officer who’s watching maybe 300 cameras in that town to see what’s going on. They’ll be getting an alert because the AI has been trained to use that type of human behavior to give an alert, so they can dispatch quickly.”

“And then the newest one is ‘digital twins,’ which take in environments such as a traffic system, a water-treatment system, a wastewater-processing system, and sees all of the components of that digitally. So, you can model what happens when something goes wrong.” AI will be integrated into most digital-technology goods and services in three to five years, if not sooner, he predicted.

“When we talk about public policies, we are talking about the things that set a strategic direction, working within legal frameworks and the priorities for the government,” he said.

One example is the idea that you don’t have personal privacy when you are using your employer’s or a school system’s devices. That’s a policy decision. “But there are also decisions about how that is administered, and we have to figure out how to administer these better.”

Take the example of surveillance cameras in a public park or other public areas. “These are some of the public policy questions: How are they monitored? By AI or humans? How are incidents tagged and responded to? Any police department that just takes their facial ID match has a problem. Arresting a person based on that is wrong. And you’ll need to do a human identification on top of it because AI alone has been getting stuff wrong. It’s going to get better, but you’ve got to do something to get human judgment in there.”

There are other implications, Pfeiffer said. For instance, under what circumstances is a public recording made available? And to whom? Think about the requests submitted under the New Jersey Open Public Records Act, and the exceptions to that law. When videos are made available, the people who get them can put them on the internet. “We need new public policy because we haven’t thought about the value issues, personal security, crime prevention and public safety implications. You must deal with these policy issues in the next few years, as AI becomes more prevalent,” he said.

Pfeiffer’s talk stirred up many more questions in the audience, as members considered all the changes that AI will bring to their municipal and school IT practices in the future.